title: Msty - Using AI Models made Simple and Easy

source: https://msty.app/

author:

published:

created: 2025-02-28

description: AI beyond just plain chat. Private, Offline, Split chats, Branching, Concurrent chats, Web Search, RAG, Prompts Library, Vapor Mode, and more. Perfect LM Studio, Jan AI, and Perplexity alternative. Use models from OpenAI, DeepSeek, Claude, Ollama, and Hugging Face in a unified interface.

tags:

- LLM

The easiest way to use local and online AI models

Without Msty: painful setup, endless configurations, confusing UI, Docker, command prompt, multiple subscriptions, multiple apps, chat paradigm copycats, no privacy, no control.

With Msty: one app, one-click setup, no Docker, no terminal, offline and private, unique and powerful features.


Using local AI models for free, like DeepSeek's new reasoning model, is just a step away!

"I just discovered Msty and I am in love. Completely. It’s simple and beautiful and there’s a dark mode and you don’t need to be super technical to get it to work. It’s magical!" - Alexe

Offline-First, Online-Ready

Msty is designed to function seamlessly offline, ensuring reliability and privacy. For added flexibility, it also supports popular online model vendors, giving you the best of both worlds.

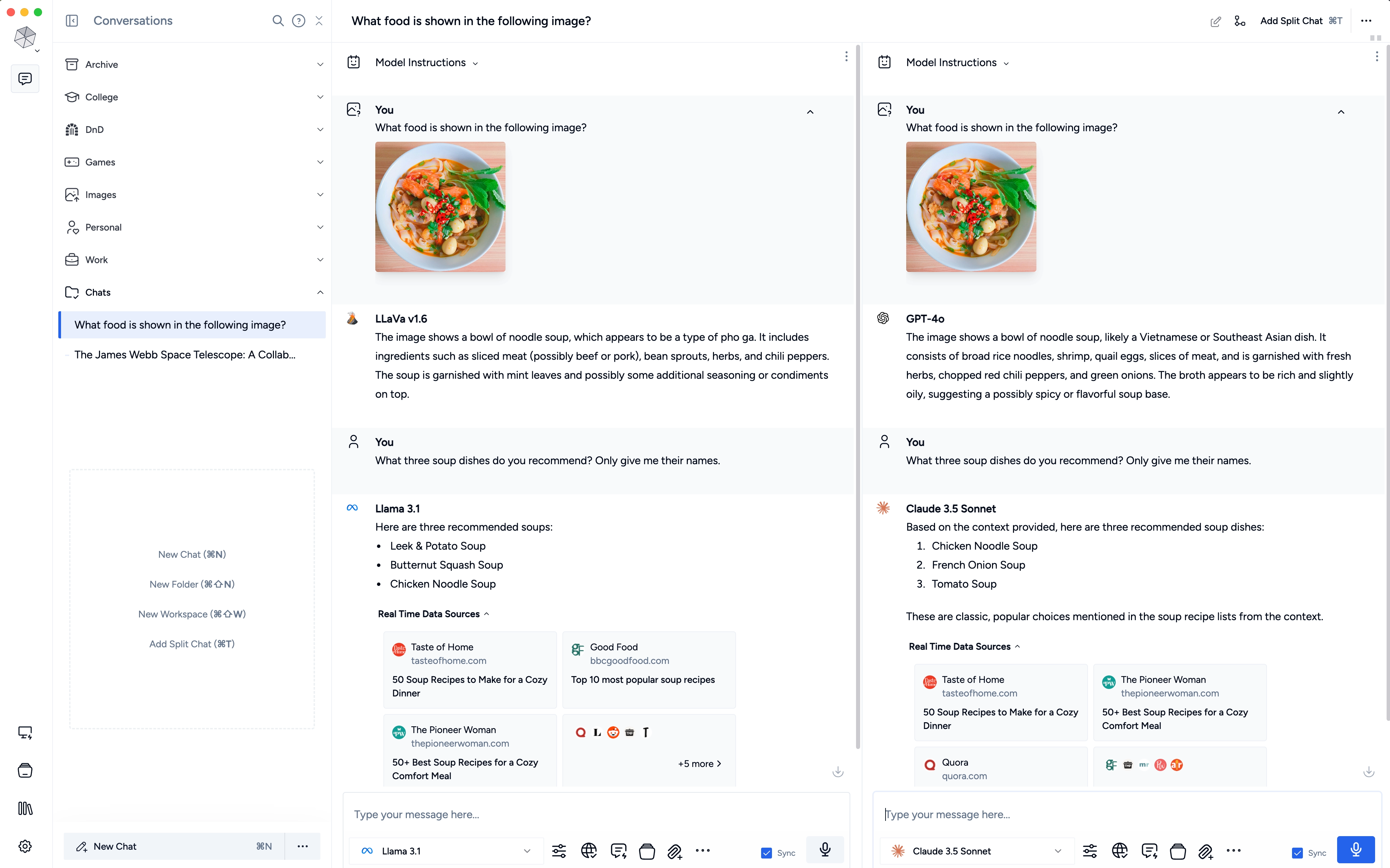

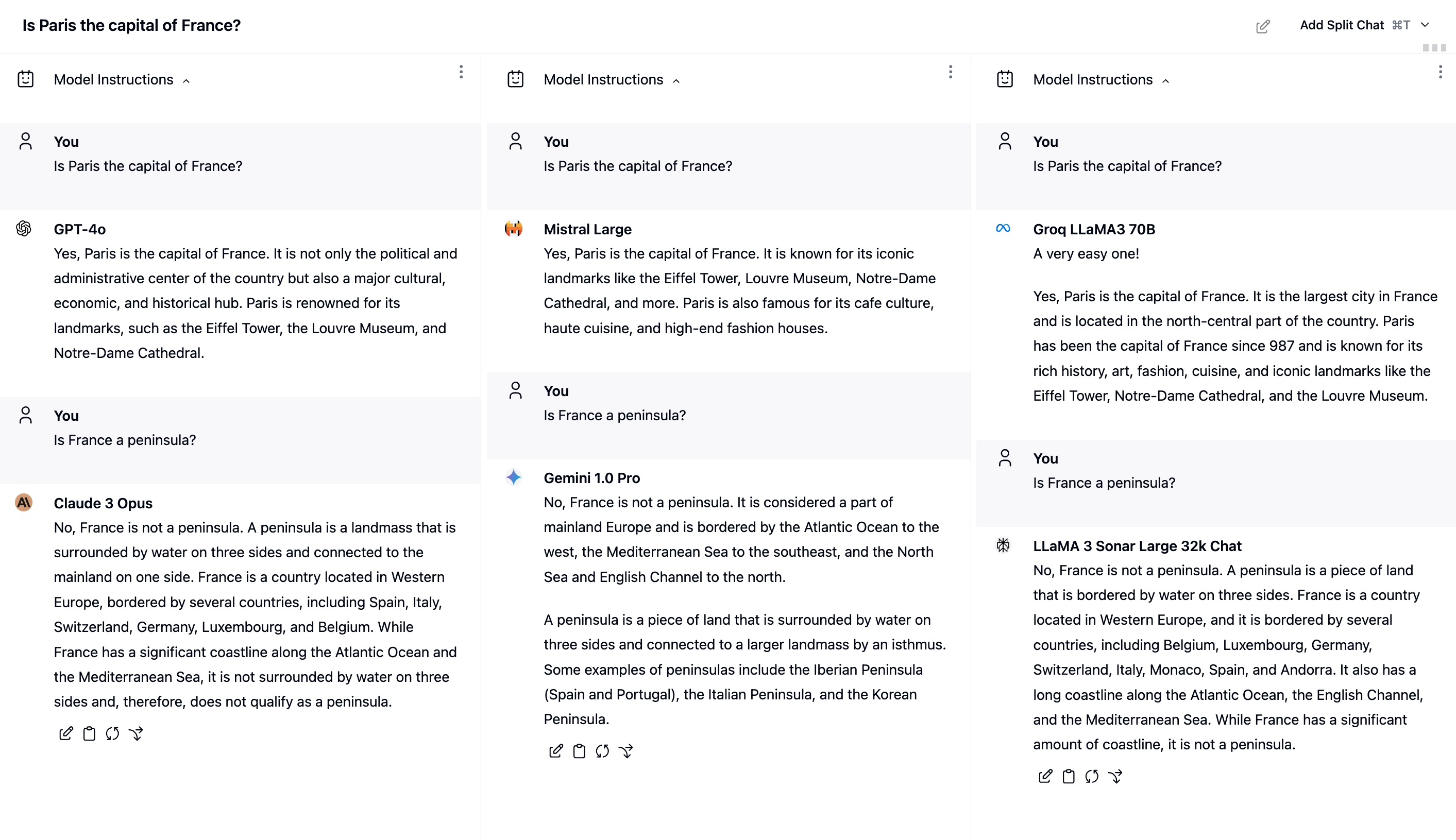

Parallel Multiverse Chats

Revolutionize your research with split chats. Compare and contrast multiple AI models' responses in real time, streamlining your workflow and uncovering new insights.

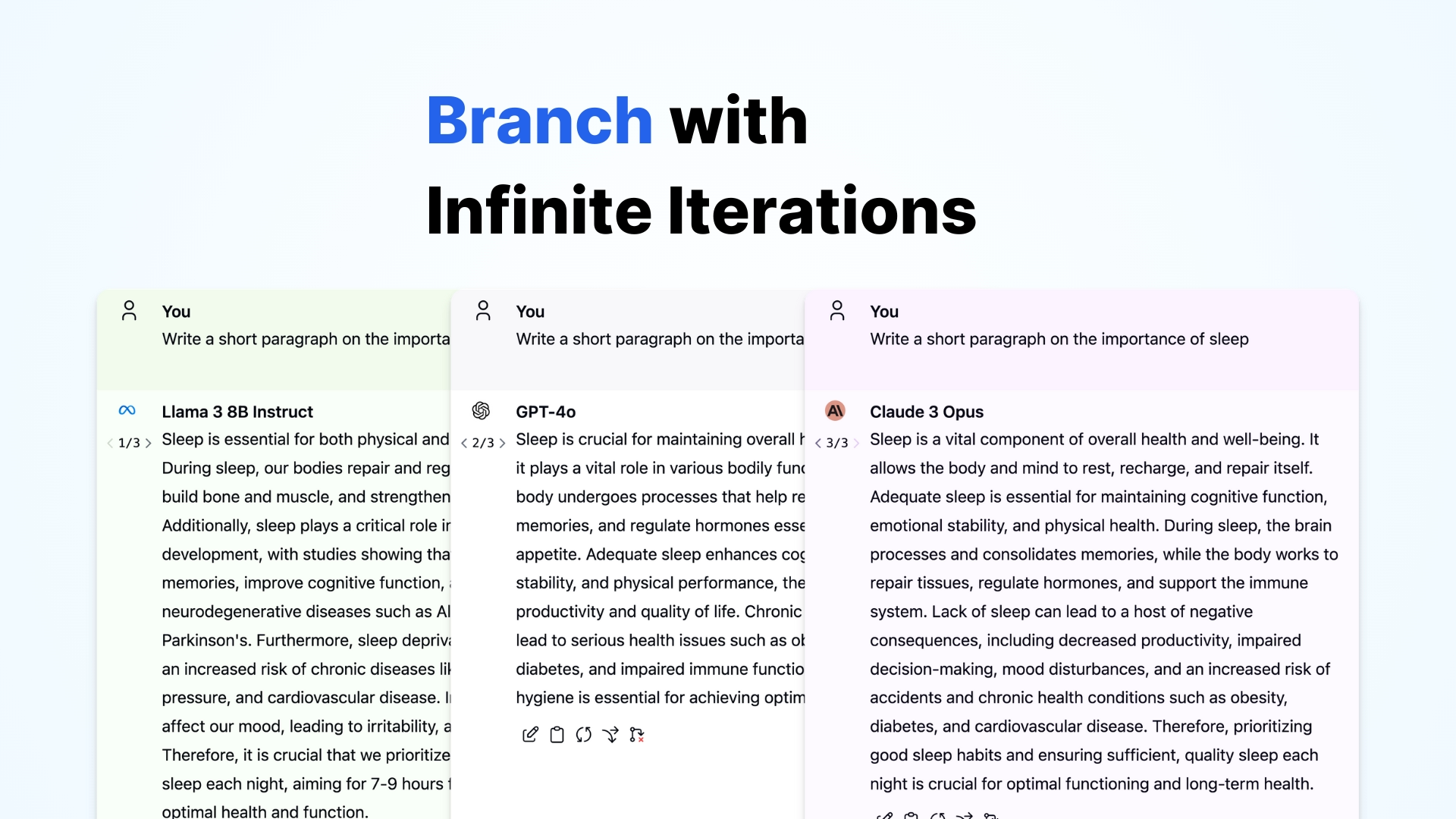

Craft your conversations

Msty puts you in the driver's seat. Take your conversations wherever you want, and stop whenever you're satisfied.

"...Its branching capabilities are more advanced than so many other tools. It's pretty awesome." - Matt Williams, Founding member of Ollama

Replace an existing answer or create and iterate through several conversation branches.

Delete branches that don't sound quite right.

You can also choose a different model for regeneration.

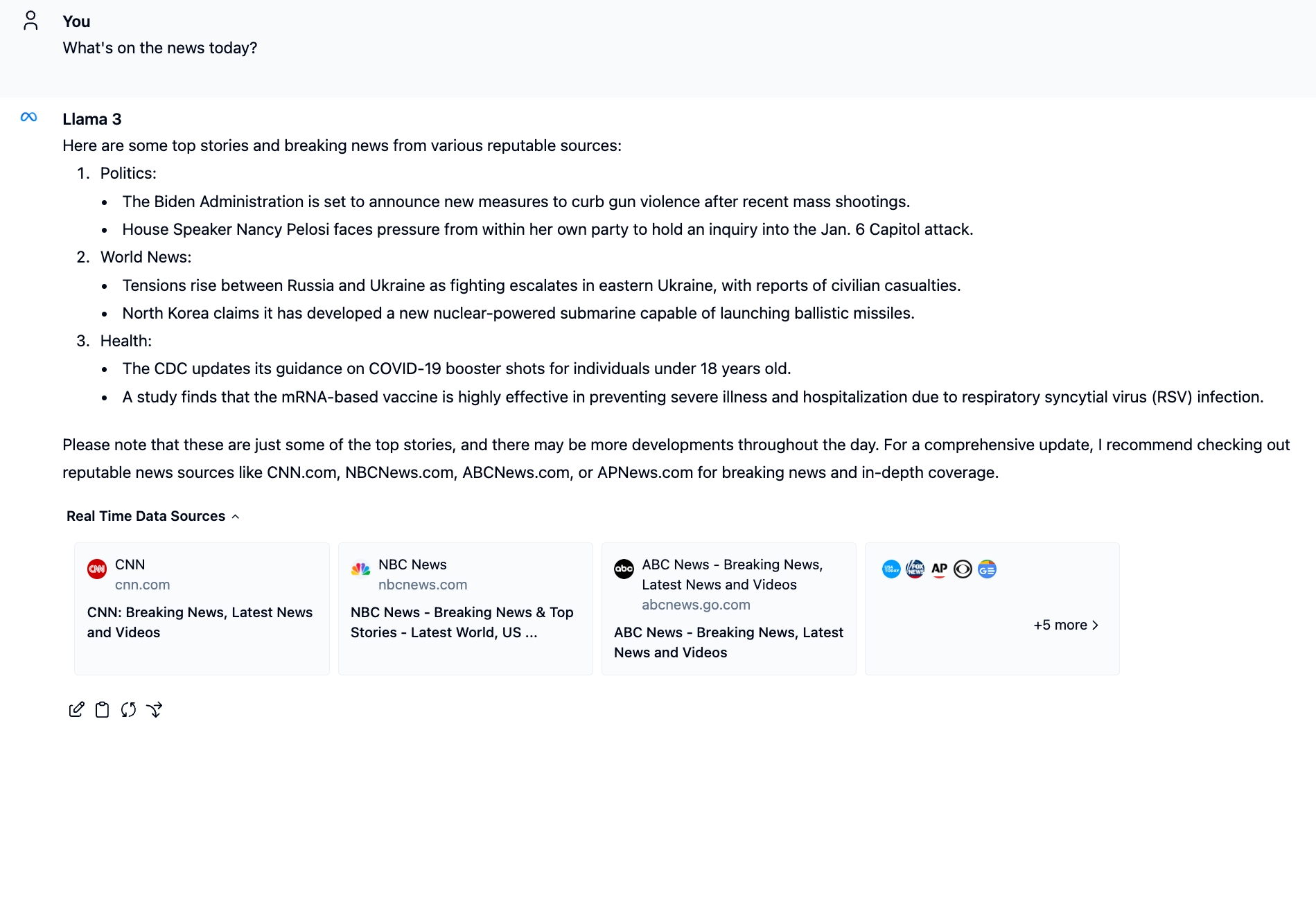

Summon Real-Time Data

Stay ahead of the curve with our web search feature. Ask Msty to fetch live data into your conversations for unparalleled relevance and accuracy.

RAG Done Right

Msty's Knowledge Stack goes beyond a simple document collection.

Leverage multiple data sources to build a comprehensive information stack.

Compose stacks using .txt, .md, .json, .jsonl, .csv, .epub, .docx files and more.

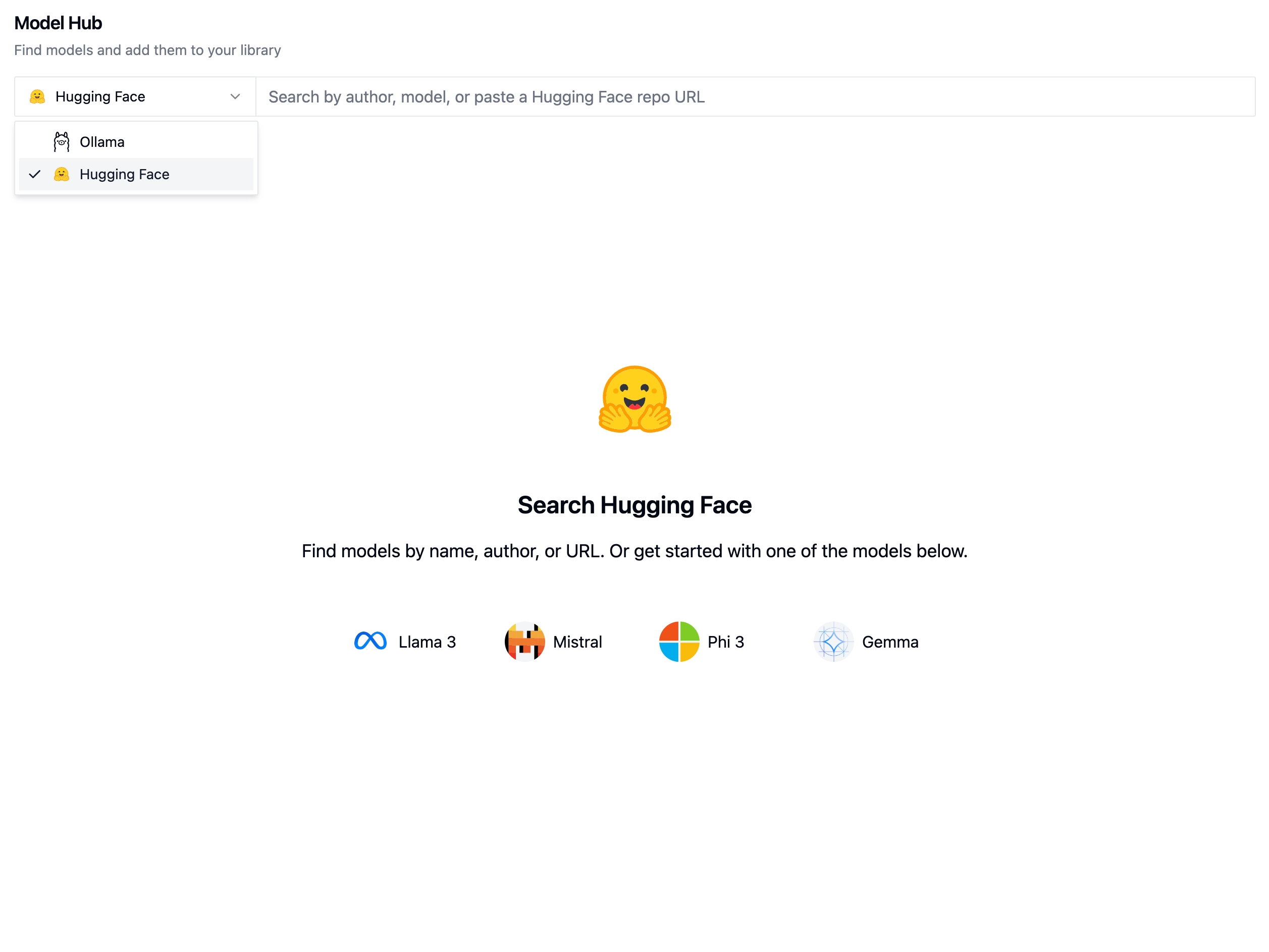

Unified Access to Models

Use any model from Hugging Face, Ollama, and OpenRouter. Choose the best model for your needs and seamlessly integrate it into your conversations.

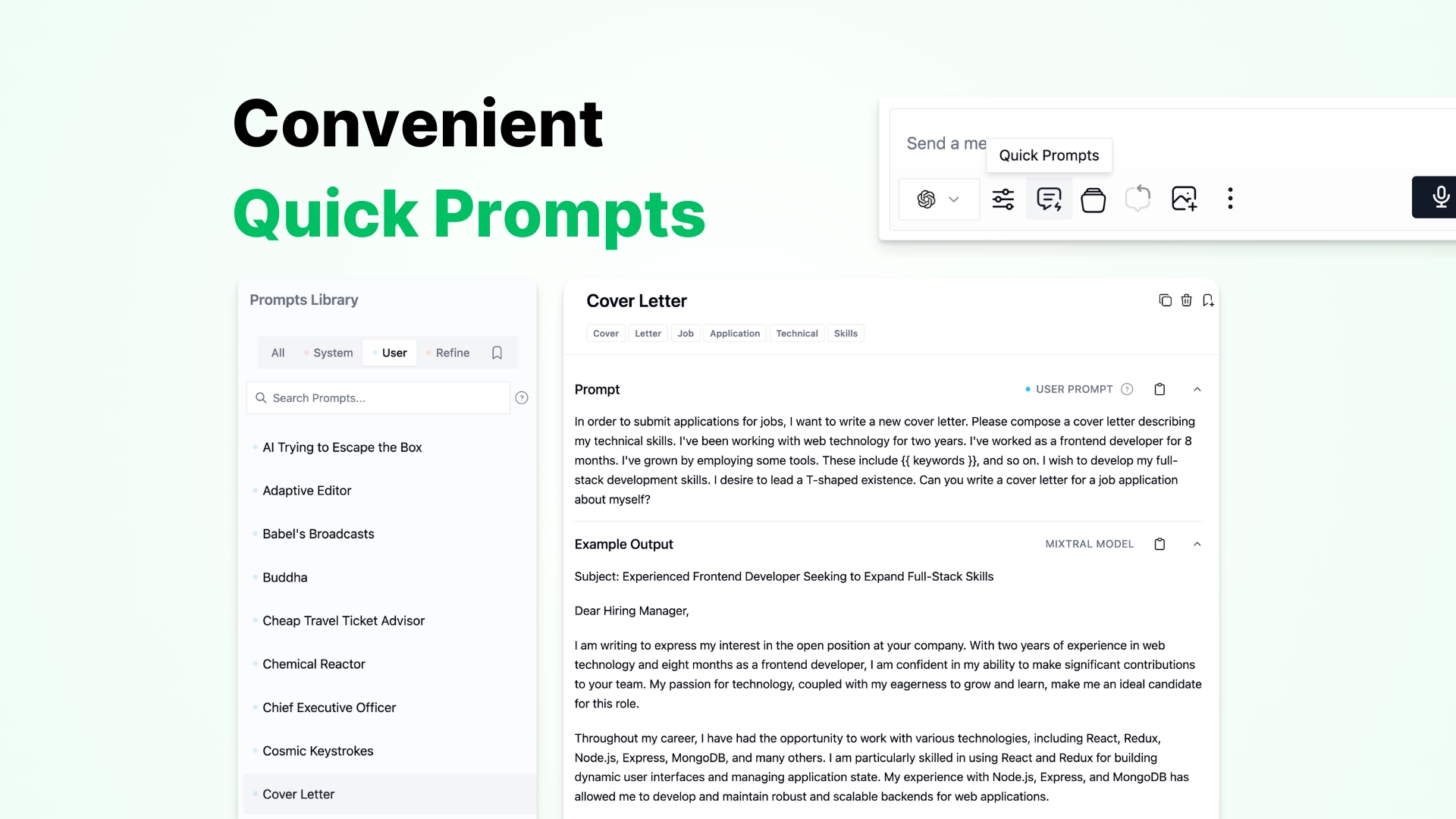

Prompt Paradise

Access a ready-made library of prompts to guide the AI model, refine responses, and fulfill your needs. You can also add your own prompts to the library.

Use one of our 230+ curated prompts and ask away about the topics that interest you.

Find example outputs for the prompt you are using in the prompts library.

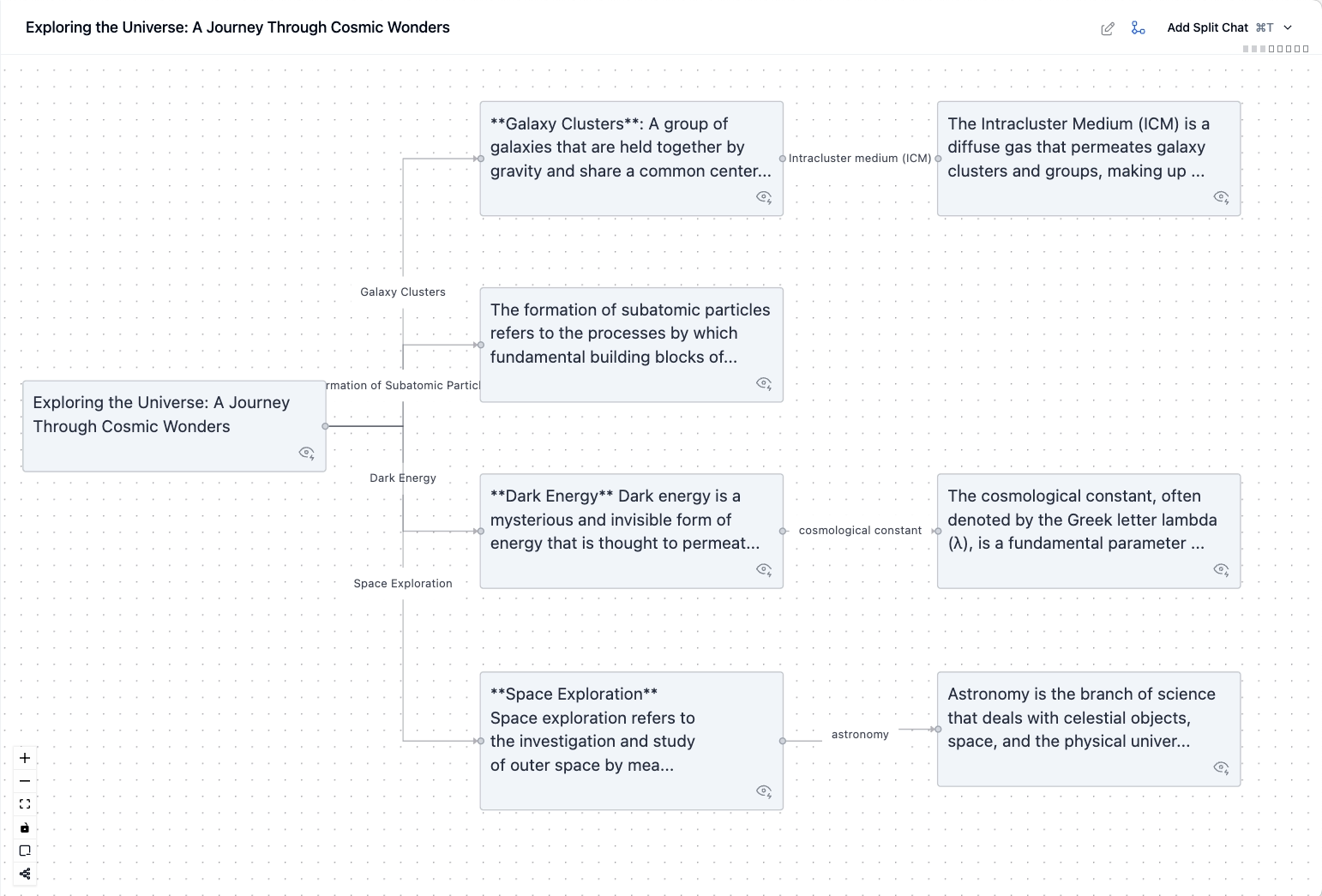

Discover & Visualize

Get ready to converse, delve, and flow! Our dynamic duo of features combines the power of delve mode's endless exploration with Flowchat™'s intuitive visualization.

Getting lost in the rabbit holes of knowledge discovery has never been more fun!

And many more features...

Ollama Integration

Use your existing models with Msty

Ultimate Privacy

No personal information ever leaves your machine

Offline Mode

Use Msty when you're off the grid

Folders

Organize your chats into folders

Multiple Workspaces

Keep data isolated in separate spaces and sync across devices

Attachments

Attach images and documents to your chat

See what people are saying

Yes, these are real comments from real users. Join our Discord to say hi to them.

MSTY’s been a powerful tool for us to unlock employee efficiency while remaining model-agnostic. The combination of a great UI and powerful, accessible features like prompt libraries, knowledge files and local LLMs has been huge for our employees.

Eytan Buchman

CMO | Freightos Group

This is fantastic! I love how you've lowered the barrier to entry for us to get into working with AI.

The best Interface I've found yet to work with local models, so thank you for releasing it!

Francesco (@francesco_36500)

...arguably the most user-friendly local LLM experience available today. Msty fits the bill so well that we had to write a HOWTO post around how we got it installed on an M1 MacBook - and in that same post had to essentially disavow our previous suggestion to use ███████

I just discovered Msty and I am in love. Completely. It’s simple and beautiful and there’s a dark mode and you don’t need to be super technical to get it to work. It’s magical!

Msty has answered most of my demands for a simple local app for comparing frontier & local models and refining prompts with a great interface. Accessible to anyone who can learn to get an API key

Dominik Lukes (@techczech)

This is the client I would recommend to most users who don't want (or can't) mess around with the command prompt or Docker. So, it's already a big step forward. 👍

... of all Ollama GUIs this is definitely the best so far. Its branching capabilities are more advanced than so many other tools. It's pretty awesome.

Matt Williams (@Technovangelist)

Founding member of the Ollama team

One of the simplest ways I've found to get started with running a local LLM on a laptop (Mac or Windows). I've been using this for the past several days, and am really impressed. Whether you're interested in starting in open source local models, concerned about your data and privacy, or looking for a simple way to experiment as a developer, Msty is a great place to start.

Mason James (@masonjames)

I have tried MANY LLM UIs (some paid ones) and I wonder why no one managed to build something so beautiful, simple, and efficient before you ... 🙂 Keep up the good work!

Fantastic app - and in such a short timeframe ❤️ I have been using ███████ up until now, and (now) I prefer Msty.

I really like the app so far, so thank you. I also have ███████ installed for some other stuff, the terminal pops up with warnings, I start feeling stressed, but I like that your app is much more opaque. No terminal outputs on your screen. Very normie friendly

Must say again how fantastic and great this app is. I enjoy Msty more and more every day. I use the heck out of it. The little quality of life features are perfect, stuff I didn't even know I needed and now I can't imagine not having them.

I self-host, so having a local assistant has been on my list of to-dos for a while. I've used a number of others in this space and they all required many dependencies or technical know-how. I was looking for something that my spouse could also download and easily use. MSTY checks all the boxes for us. Easy setup (now available in Linux flavor!), local storage (security/privacy amirite?), model variety (who doesn't like model variety?), simple clean interface. The only drawback would be your computer and the requirements needed to run some of the larger more powerful models. 5/7 would download again

Chiming in to say it looks really good and professional! It’s definitely “enterprise level” in a good way, I was initially searching for a “pricing” tab lol.

My brother isn’t a coder at all and he’s gotten very into ChatGPT over the last year or so, but a few weeks ago he actually cancelled his subscription in favour of Msty. You’re making a good product dude 🙏

just came here to say i just discovered msty from the official ollama github and that ya'll are doing incredible work 🙏

Ready to get started?

We are available on Discord if you have any questions or need help.